The Heart of the Code Review: My Experience With Quality and Culture

Code review has always been a sign of a healthy team and our last line of defense against expensive mistakes, whether we used paper in the 1970s or AI tools today. It reminds me that responsibility and teamwork have always mattered in this process.


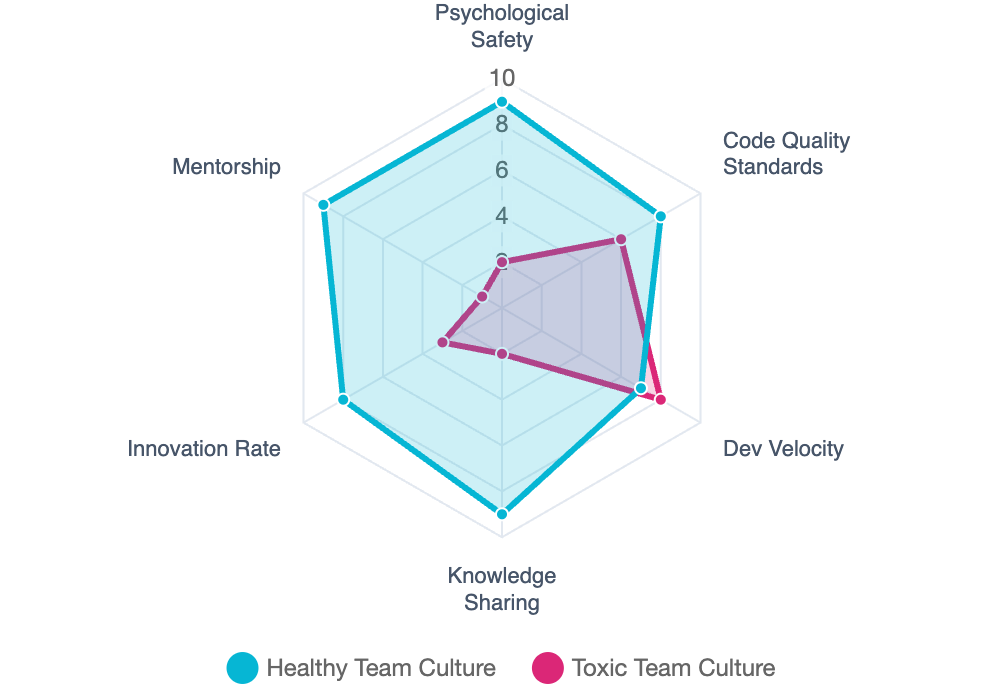

Even now, I still get a little nervous when I submit new code, knowing others will check my work and ideas. Many people feel the same way. Some see code reviews as just a way to catch bugs or complete a technical step. But after seeing this process change over time, I’ve learned it means much more. Code reviews reveal how strong our team is. They show our strengths, our blind spots, and how much we trust each other.

From Paper Stacks to Pull Requests: How We Got Here

I wasn’t there for the very beginning, but learning about the early days of coding has always given me a stronger appreciation for how far our processes have come. In the 1970s, IBM teams used Fagan Inspections, in which people sat together and silently examined stacks of printed code line by line, searching for mistakes (Akinola & Osofisan, 2009).

When I think about those stories, I see how hard that work must have been. Learning about these early ways of working shapes how I do code reviews now. It reminds me that responsibility and teamwork have always mattered in this process.

When I started my career, we were in the early days of digital work, emailing code snippets back and forth. This was better than paper, but still not very efficient. Tools like Git and GitHub, along with the pull request, changed that: the whole team could see a change in one place and leave feedback on specific lines of code.

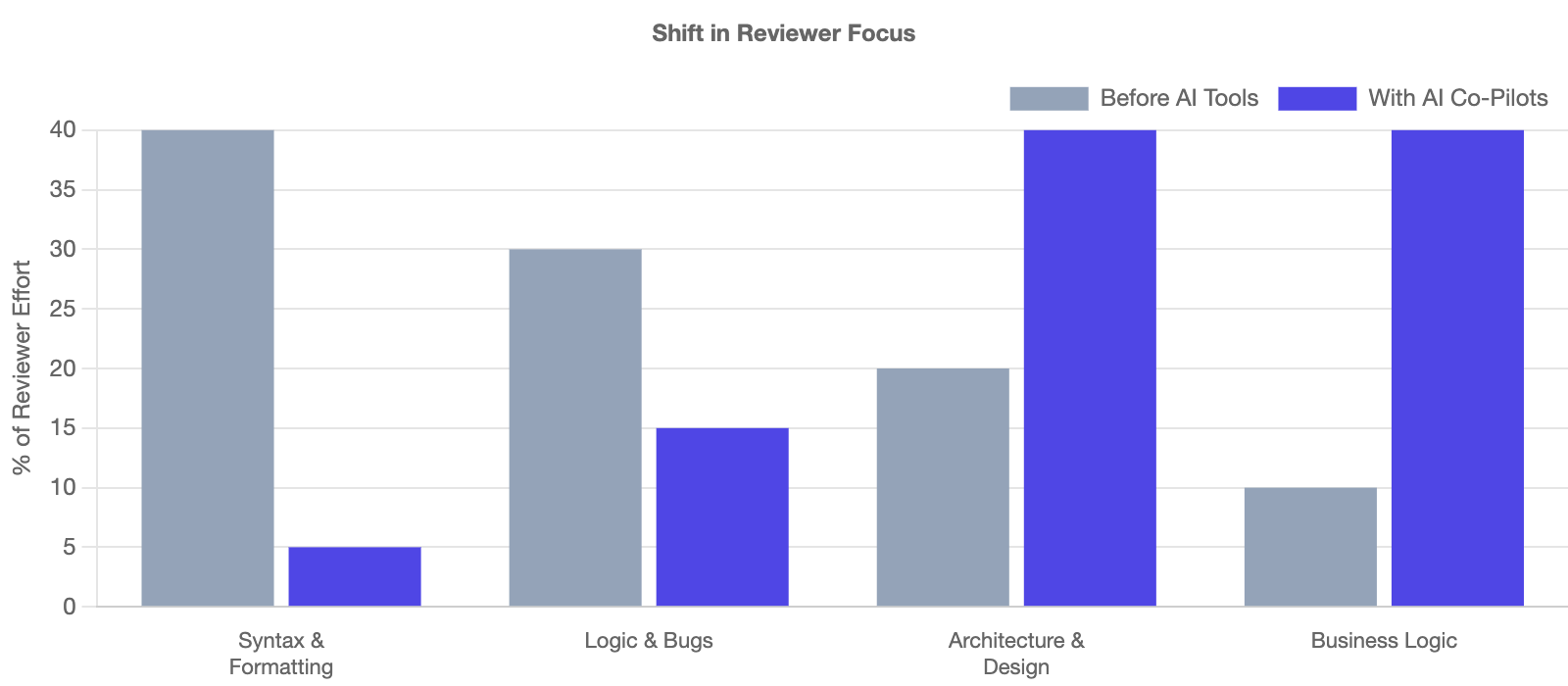

Today, we use automated linters to catch minor issues such as typos or formatting errors. This allows us to focus on more important questions: Does the code fix the user's problem? Will it continue to work well in the future?


Why I Believe in the Review Process

People sometimes ask why we go through this extra step. Wouldn’t it be faster to let AI handle it and just ship the code? In my experience, the benefits are worth the extra time.

1. The Last Line of Defense

We all get "code blind." After staring at a problem for hours, I can miss simple mistakes. Having someone else check my work is our team’s safety net. It helps catch bugs before they turn into costly problems for our customers.

The cost of a missed mistake can be enormous. A logic flaw in a smart contract function allowed an attacker to drain $196 million in crypto assets from Euler Finance in 2023 (Euler Finance Exploited with Flash Loan Attack Resulting in Loss of $196 Million, 2023). In 2024, a configuration logic error in a CrowdStrike update triggered a global Windows outage, resulting in estimated economic losses of over $5 billion. And in 2025, AI-generated code, reviewed by zero humans, caused a 9-hour checkout blackout during a peak seasonal sale.

2. A Personal Classroom

I learned a lot from code reviews, especially when I was new. Senior teammates often showed me better ways to write code. Now, I try to share what I’ve learned with others. Reviews help spread knowledge, so it doesn’t stay with just one person. We call this raising the "bus factor": the number of people who would have to leave before the project stalls (Jabrayilzade et al., 2022).

3. Finding Our Rhythm

When we review each other’s work, it keeps us on the same page. We set standards together, making the entire codebase easier to understand. It also gives us a feeling of shared ownership. To build this sense of shared responsibility, try rotating the code reviewer role among team members. This way, everyone gets to give and receive feedback, which builds empathy and understanding. Holding regular retrospectives to discuss these reviews can also help everyone feel responsible for maintaining high code quality.
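As a rough illustration of rotating the reviewer role, here is a minimal Python sketch. The function name `rotate_reviewers` and the teammates' names are made up for this example, and it assumes everyone on the team has a unique name. It is one simple way to take turns, not a description of any particular tool.

```python
def rotate_reviewers(team, change_authors):
    """Pair each change's author with the next teammate in the rotation.

    Hypothetical helper: skips the author so nobody reviews their own change.
    """
    if len(team) < 2:
        raise ValueError("need at least two people to rotate reviews")
    assignments = []
    turn = 0  # position in the team list of whoever is due to review next
    for author in change_authors:
        reviewer = team[turn % len(team)]
        if reviewer == author:
            # The author is up next, so move one place along for this change.
            turn += 1
            reviewer = team[turn % len(team)]
        assignments.append((author, reviewer))
        turn += 1
    return assignments


team = ["Ana", "Ben", "Chi"]  # illustrative names only
for author, reviewer in rotate_reviewers(team, ["Ana", "Ana", "Ben", "Chi"]):
    print(f"{author}'s change is reviewed by {reviewer}")
```

A plain round-robin like this keeps the review load even and makes sure junior and senior teammates both review and get reviewed. A real team would probably also account for vacations and areas of expertise.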

Bikeshedding, also known as Parkinson's law of triviality, describes the tendency to devote disproportionate time to trivial matters while neglecting important ones. This concept is illustrated by the example of a committee spending more time discussing the materials for a bicycle shed than the design of a nuclear power plant. (Source: Wikipedia)

Managing the Dark Side: When Reviews Go Wrong

Not every review is easy or enjoyable. Sometimes, the process can go wrong, and it often starts with one of these problems:

- The "Nitpicking" Trap: I’ve seen people spend an hour arguing about spaces versus tabs. This hurts morale. If a tool can check it, let the tool handle it. I’ve learned we should focus on bigger things, like the system’s design or main business logic, where human insight matters most. This is a form of "bikeshedding," where teams spend too much time on small details and miss more important issues.

- The Ego Bruise: In the heat of a deadline, it’s remarkably easy for a technical critique to feel personal. I’ve had to learn (and teach) that we are critiquing the code, not the coder. When we lose that distinction, people stop taking risks and start hiding their mistakes.

- The Gatekeeper Problem: Sometimes, one senior developer becomes a bottleneck, insisting that everything be done exactly their way. This suppresses creativity and makes the rest of the team feel like they’re just "typing for the boss" rather than contributing.

Our New AI Co-Pilots: Friend or Foe?

Now, AI is part of my daily work. We use it to summarize changes, suggest fixes, and spot possible bugs. It’s fast, but it also brings risks. To keep things clear, we have rules: no schema edits without a human sign-off, and all AI-suggested changes need a final human review before merging. Routine tasks like syntax fixes, formatting, and initial bug checks are handled by AI. More complex work, like understanding business logic, making sure code meets user needs, and checking the overall impact of changes, stays with developers. By making these roles clear, we avoid confusion and make sure everyone knows when human review is needed.
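To make those two rules concrete, here is a minimal Python sketch of how a merge check could encode them. Everything in it is an assumption for illustration: the `Change` class, the `blocking_reasons` function, and the `migrations/` and `schema/` path prefixes are hypothetical names, not part of any real tool or of our actual setup.

```python
from dataclasses import dataclass, field

# Assumed locations of schema files; adjust to the repository's real layout.
SCHEMA_PREFIXES = ("migrations/", "schema/")


@dataclass
class Change:
    """Hypothetical summary of a proposed change."""
    files: list
    ai_suggested: bool = False
    human_approvals: list = field(default_factory=list)


def blocking_reasons(change):
    """Return the reasons this change cannot merge yet (empty list means none)."""
    reasons = []
    has_human = len(change.human_approvals) > 0
    touches_schema = any(path.startswith(SCHEMA_PREFIXES) for path in change.files)
    if touches_schema and not has_human:
        reasons.append("schema edit needs a human sign-off")
    if change.ai_suggested and not has_human:
        reasons.append("AI-suggested change needs a final human review")
    return reasons


ai_fix = Change(files=["app/cart.py"], ai_suggested=True)
print(blocking_reasons(ai_fix))   # one reason: no human has reviewed it yet

ai_fix.human_approvals.append("reviewer-1")
print(blocking_reasons(ai_fix))   # empty list: the rule is satisfied
```

The sketch only covers the two rules above. It says nothing about whether the change is actually good, which is the part that stays with the human reviewer.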

The Good: AI never gets tired. It can review thousands of lines of code in seconds and applies the same rules every time. Tools like CodeRabbit and Qodo (formerly Codium) are now our main first reviewers. They do more than catch typos; they check logic across files and can even suggest fixes ready to merge. New features in GitHub Copilot and Gemini Code Assist also summarize big changes in plain English, saving us hours of work.

The Risk: What worries me most is what I call "The Silence of the Devs." If we let AI handle all the feedback, we stop talking to each other. We lose the chance to mentor and explain the reasons behind our choices. If we just accept AI suggestions without thinking, we could end up with a fragile system that falls apart later.

My Reviewer's Checklist: Keeping the Human in the Loop

Even though AI takes care of the small stuff, I still use a mental checklist to make sure I'm adding real value as a human reviewer. Here's what I focus on:

- Validate the Business Why: Does this code actually solve the user’s problem, or is it just technically correct? AI understands code, but it doesn’t always understand our customers.

- Ensure Architectural Fit: Does this change fit our long-term plan, or is it a shortcut that could become technical debt later?

- Identify Security & Edge Cases: AI is good at finding common issues, but it can miss hidden security problems or what happens if the database fails. I always ask myself, "What's the worst thing that could happen here?"

- Increase Readability: If I look at this code six months from now, will I understand it? If the logic is too tricky, I ask for clear variable names, a comment, or a simpler approach.

- Mentorship Opportunity: Is there a chance to teach something here? Instead of just saying "change this," I try to discuss why another way might work better.

Conclusion: It’s Always About People

After all these years in development, I’ve learned that code review isn’t simply a technical task. It’s really about people. We shouldn’t try to replace human decision-making with machines. Instead, we should use our tools to make our interactions more meaningful.

Whether we use paper or AI, the core of code review stays the same: aiming for excellence, being open to learning, and building a culture of respect. Remember: "People first, code follows."

References

Euler Finance Exploited with Flash Loan Attack Resulting in Loss of $196 Million. (2023, March 12). Distributed Networks Institute. https://dn.institute/research/cyberattacks/incidents/2023-03-13-euler-finance/

Jabrayilzade, E., Evtikhiev, M., Tüzün, E. & Kovalenko, V. (2022). Bus Factor In Practice. IEEE/ACM 44th International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP). https://doi.org/10.48550/arXiv.2202.01523

Wiginton, H. (2025). AI Code Reviewers: Your New Teammate or Your Replacement? Medium. https://medium.com/@hackastak/ai-code-reviewers-your-new-teammate-or-your-replacement-cc2bf3fb53a2

Akinola, O. S. & Osofisan, A. O. (2009). An Empirical Comparative Study of Checklist based and Ad Hoc Code Reading Techniques in a Distributed Groupware Environment. arXiv:0909.4260. https://doi.org/10.48550/arXiv.0909.4260