The AI-Human Workflow Matrix: A Guide for Modern Development Teams

As we built modern, efficient teams, our true goal emerged: work smarter while retaining the personal touch that makes our products special and delights our customers.

To address these needs, we created the AI-Human Workflow Matrix. This structured approach helps us decide when to assign tasks to AI rather than rely on human expertise. The matrix divides our work into three distinct zones: tasks for full AI automation, those best tackled through human-AI collaboration, and tasks that require critical human involvement. By defining these zones upfront, we stay aligned, leverage AI, and preserve the unique human qualities of our products.

We make these choices based on two main factors: the technical complexity of a task and the amount of context it requires.

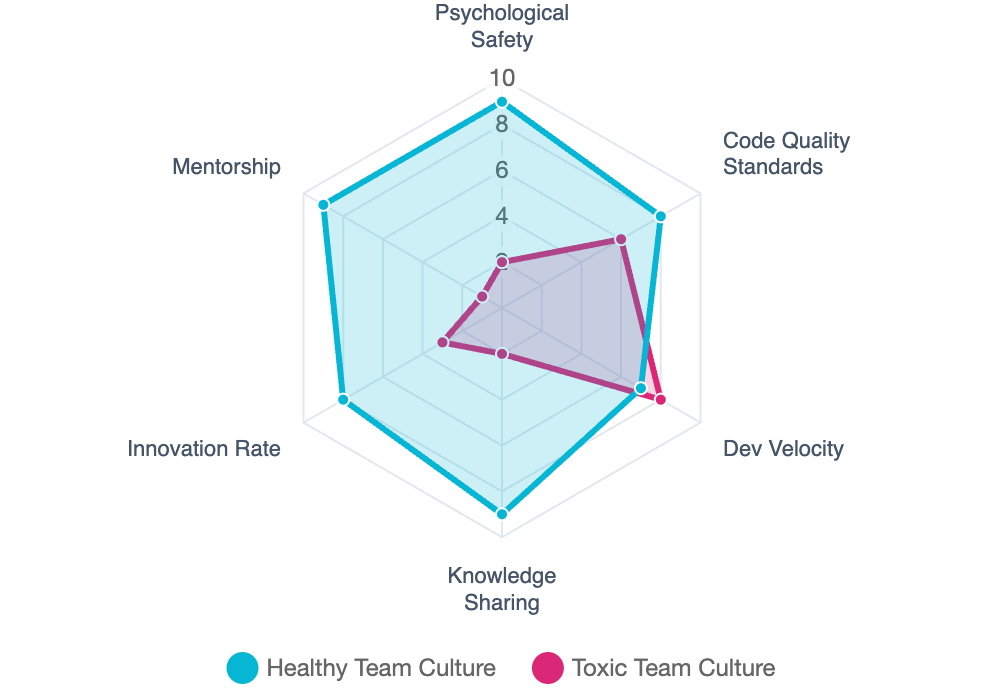

By mapping our daily tasks this way, we stay efficient and maintain a strong team culture.
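
As a rough sketch, the two factors can be turned into a zone lookup. The 1-to-5 scoring scale, the `THRESHOLD` cut-off, and the rule for combining the two scores are all assumptions made up for illustration. The figure above defines the real boundaries.

```python
from enum import Enum


class Zone(Enum):
    AI = "The AI Zone"
    COLLABORATIVE = "The Collaborative Zone"
    CRITICAL_THINKING = "The Critical Thinking Zone"


# Hypothetical cut-off on an assumed 1-5 scale; scores above it count as "high".
THRESHOLD = 3


def zone_for(complexity: int, context: int) -> Zone:
    """Map a task's technical complexity and required context to a zone.

    Assumed rule: both factors high -> Critical Thinking, exactly one
    high -> Collaborative, neither high -> AI.
    """
    for name, score in (("complexity", complexity), ("context", context)):
        if not 1 <= score <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {score}")

    high_complexity = complexity > THRESHOLD
    high_context = context > THRESHOLD

    if high_complexity and high_context:
        return Zone.CRITICAL_THINKING
    if high_complexity or high_context:
        return Zone.COLLABORATIVE
    return Zone.AI
```

A team would likely tune the scale and the cut-off after scoring a handful of real tasks together.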

The Who-Does-What Guide

We use a straightforward decision matrix to sort our work and keep things clear.

| Task Type | Handled By | Why? |
|---|---|---|
| Syntax & Style | AI (100%) | These are rule-based tasks. |
| PR Summarization | AI (Primary) | AI is great at the "what," but we still verify the "why". |
| Logic & Bug Hunting | Collaborative | AI finds common patterns; we find the subtle bugs that require intent. |
| Architecture | Human (Primary) | Only we know the 12-month roadmap and the history of technical debt. |
| Mentorship | Human (100%) | AI can fix code, but it cannot "grow" a developer. |
| Security Scanning | AI (First Pass) | AI can scan massive databases of known threats faster than any of us. |
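
If the matrix needs to live somewhere a script or bot can read it, one option is a plain lookup table. This is a minimal sketch: the key names, the `Owner` enum, and the `owner_for` helper are hypothetical, and the fallback to a human owner for unlisted task types is a conservative assumption, not a rule stated in the table.

```python
from enum import Enum


class Owner(Enum):
    AI_FULL = "AI (100%)"
    AI_PRIMARY = "AI (Primary)"
    AI_FIRST_PASS = "AI (First Pass)"
    COLLABORATIVE = "Collaborative"
    HUMAN_PRIMARY = "Human (Primary)"
    HUMAN_FULL = "Human (100%)"


# One entry per row of the table above; the keys are illustrative names.
WHO_DOES_WHAT = {
    "syntax_and_style": Owner.AI_FULL,
    "pr_summarization": Owner.AI_PRIMARY,
    "logic_and_bug_hunting": Owner.COLLABORATIVE,
    "architecture": Owner.HUMAN_PRIMARY,
    "mentorship": Owner.HUMAN_FULL,
    "security_scanning": Owner.AI_FIRST_PASS,
}


def owner_for(task_type: str) -> Owner:
    """Return who handles a task type.

    Unknown task types fall back to a human owner (an assumption:
    when the matrix is silent, a person decides).
    """
    return WHO_DOES_WHAT.get(task_type, Owner.HUMAN_PRIMARY)
```

For example, `owner_for("mentorship")` returns `Owner.HUMAN_FULL`, while a task type missing from the table falls back to `Owner.HUMAN_PRIMARY`.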

Finding the Right "Zone" for Every Task

I’ve divided our work into three clear zones. This helps us focus on where we make the biggest impact.

1. The AI Zone

Here, our goal is simple: automate any task that has a clear, correct answer. We let the AI handle these jobs. When I see a task that fits this criterion, I immediately move it to the AI list. This keeps our workflow streamlined and efficient.

- Linting and Formatting: Enforcing our team's style guide.

- Boilerplate Generation: Creating shells for unit tests or basic API scaffolding.

- Documentation: Generating initial README summaries and docstrings.

2. The Collaborative Zone

In this zone, we treat AI as a powerful assistant. We work together as partners:

- Performance Optimization: The AI might suggest a faster algorithm, but we decide if the added complexity is actually worth it.

- Refactoring: The AI suggests "cleaner" code, and we make sure it doesn't break external dependencies the AI can't see.

- Agentic Workflows: Autonomous agents can plan, write, test, and modify code with minimal developer intervention, which shifts our role toward orchestration.

3. The Critical Thinking Zone

This is where we focus on what matters most to our product and our team. Only we handle these tasks:

- The Business "Why": We ask if a feature actually solves the customer’s problem.

- Edge Case Strategy: We decide how the system should fail gracefully during an outage.

- Ethics & Fairness: We audit algorithms for bias or intellectual property concerns.


When to Apply the Brakes: The "Red Flag" Rule

Even if a task seems ideal for AI, our "Red Flag" rule takes precedence. If any of the points below applies during the pre-task review, the developer tags the task as requiring human oversight and is responsible for making sure it is manually reviewed before approval, rather than relying on AI automation. The process is short and actionable: check for Red Flags, tag the task, and follow the manual review process.

- High Stakes: Anything involving billing, personal data (PII), or core security.

- Novelty: Brand-new features or architectures that don’t have existing patterns to follow.

- Ambiguity: "Fuzzy" requirements based on a meeting conversation rather than written documentation.
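
The three flags can be sketched as a small check that runs during the pre-task review. The `Task` fields and the function names below are hypothetical; in practice a developer answers these questions by judgment, and the code only records the answers.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Illustrative task record; every field name is an assumption."""
    title: str
    touches_billing: bool = False
    touches_pii: bool = False
    touches_core_security: bool = False
    has_existing_pattern: bool = True
    has_written_requirements: bool = True


def red_flags(task: Task) -> list[str]:
    """Return the names of the Red Flags that apply to a task."""
    flags = []
    if task.touches_billing or task.touches_pii or task.touches_core_security:
        flags.append("High Stakes")
    if not task.has_existing_pattern:
        flags.append("Novelty")
    if not task.has_written_requirements:
        flags.append("Ambiguity")
    return flags


def needs_human_oversight(task: Task) -> bool:
    """A single Red Flag is enough to require manual review."""
    return bool(red_flags(task))
```

A task such as `Task("Update invoice rounding", touches_billing=True)` would come back with the "High Stakes" flag and therefore need human oversight.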

How to Use This Checklist in Your Workflow

I've learned that the best way to remember these flags is to make them part of my daily routine:

- The Pre-Flight Check: Before starting a task, a developer quickly reviews this list. If they spot a "Red Flag," they tag the task as "Human-Critical" in Jira, our project management tool.

- The "Red Flag" Label: When someone submits a Pull Request for a task in a Red Flag area, they add the "Red Flag" label. The reviewer then knows to set aside the AI-generated summary and perform a thorough manual review, so flagged work always receives human attention before approval.

- The Monthly Retrospective: Each month, we meet to ask, "Did a Red Flag help us catch a mistake?" or "Should we add a new flag because of a bug we missed?"
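
The label step lends itself to a small routing check. This is a sketch only: `review_mode`, its return values, and the case-insensitive label matching are assumptions, and it does not call any real Jira or Git hosting API.

```python
# Label text taken from the checklist above; matching is case-insensitive.
RED_FLAG_LABEL = "red flag"


def review_mode(pr_labels: list[str]) -> str:
    """Decide how a Pull Request should be reviewed, based on its labels.

    Returns "manual" when the Red Flag label is present (the reviewer
    sets aside the AI-generated summary), otherwise "ai-assisted".
    """
    normalized = {label.strip().lower() for label in pr_labels}
    if RED_FLAG_LABEL in normalized:
        return "manual"
    return "ai-assisted"
```

For instance, `review_mode(["Red Flag", "backend"])` returns `"manual"`, while `review_mode(["backend"])` returns `"ai-assisted"`.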

How We Make It Work Every Day

To make sure this model works, we stick to a few simple practices:

- Audit the AI: Each month, we peer-review three AI suggestions to make sure the AI isn't picking up bad habits. We pick them at random from the past month's AI-generated outputs so that different task types and complexities are fairly represented. To turn these audits into learning moments, each auditor records one insightful observation per review. We share these "what surprised us" notes during our retrospectives, which turns a compliance step into collective learning and builds our expertise over time.

- Human-First Comments: During reviews, we ensure we leave at least one human comment, such as praise or a question about intent, to maintain a strong social connection.

- Don't Ghost the AI: If the AI makes a mistake, we fix it. This prevents errors from piling up and causing bigger problems later. To enhance this process, we propose maintaining an error log to document recurring AI mistakes. By tracking these issues, our team can identify patterns, make necessary adjustments, and improve our workflow and AI performance over time.
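
Two of these practices are easy to sketch in code: drawing the monthly audit sample and spotting recurring mistakes in the error log. The function names, the log entry shape (a dict with a `category` key), and the `min_count` threshold are hypothetical. The sample is also a simple random draw, so it does not guarantee coverage of every task type; stratifying by task type would be a further step.

```python
import random
from collections import Counter


def pick_audit_sample(suggestion_ids, k=3, seed=None):
    """Randomly pick up to k AI suggestions from last month's pool.

    Pass a seed to make the draw reproducible, for example when the
    selection itself should be auditable.
    """
    rng = random.Random(seed)
    pool = list(suggestion_ids)
    return rng.sample(pool, min(k, len(pool)))


def recurring_mistakes(error_log, min_count=2):
    """Return (category, count) pairs seen at least min_count times.

    Each log entry is assumed to be a dict with a "category" key.
    Results are ordered from most to least frequent.
    """
    counts = Counter(entry["category"] for entry in error_log)
    return [(cat, n) for cat, n in counts.most_common() if n >= min_count]
```

Categories that keep showing up in `recurring_mistakes` are natural talking points for the monthly retrospective.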

By using this matrix, we let technology handle what it’s best at, so we can focus on the work that needs our unique skills. We can confidently embrace the future of AI, knowing that we are staying firmly in the pilot's seat to deliver products that are both technically precise and human-centered.